🚗 Building a TinyML Object Detection Model for Autonomous Mobile Robots

Real-time object detection on a microcontroller-powered robot.

Introduction

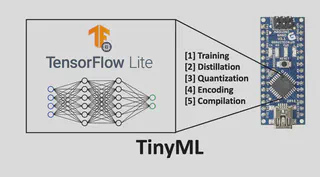

Tiny Machine Learning (TinyML) enables the deployment of machine learning models on microcontrollers and other resource-constrained devices. This capability is particularly beneficial for autonomous mobile robots that require real-time object detection without relying on cloud connectivity.

In this guide, we’ll walk through the complete lifecycle of developing a TinyML model for object detection: from conceptualization and data collection to model training, optimization, and deployment.

1. Define the Problem

For autonomous mobile robots, real-time object detection is crucial for navigation and obstacle avoidance. Our goal is to enable a robot to detect and classify objects in its path using onboard processing.

Example Use Case:

- Application: A delivery robot navigating indoor environments.

- Objective: Detect obstacles like furniture or humans to avoid collisions.

2. Data Collection

Collect a diverse dataset of images representing the objects the robot should detect (e.g., people, furniture, and other indoor obstacles) under various lighting and environmental conditions. Tools like Edge Impulse facilitate data collection and labeling directly from connected devices.

Steps:

- Capture Images: Use the robot’s onboard camera to capture images in different environments.

- Label Data: Annotate images with bounding boxes around target objects.

- Augment Data: Apply transformations like rotation and scaling to increase dataset diversity.
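When an image is transformed, its bounding-box labels have to be transformed with it, or the annotations no longer line up with the objects. The following is a minimal sketch of that idea in plain Python, assuming a grayscale image stored as a list of pixel rows and boxes given as `(x_min, y_min, x_max, y_max)` pixel edges. The function names are hypothetical, and a real pipeline would more likely use an image library or the augmentation options built into the training tool.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), pixel edges
Image = List[List[int]]                  # grayscale image as rows of pixel values


def flip_horizontal(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror the image left-to-right and mirror each box with it."""
    width = len(image[0])
    flipped = [row[::-1] for row in image]
    new_boxes = [(width - x_max, y_min, width - x_min, y_max)
                 for x_min, y_min, x_max, y_max in boxes]
    return flipped, new_boxes


def rotate_90_clockwise(image: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Rotate the image a quarter turn clockwise and rotate each box with it."""
    height = len(image)
    rotated = [list(column) for column in zip(*image[::-1])]
    # A point (x, y) in the original lands at (height - y, x) after the turn.
    new_boxes = [(height - y_max, x_min, height - y_min, x_max)
                 for x_min, y_min, x_max, y_max in boxes]
    return rotated, new_boxes


def scale_up(image: Image, boxes: List[Box], factor: int) -> Tuple[Image, List[Box]]:
    """Enlarge the image by an integer factor (nearest neighbour) and scale boxes."""
    scaled = [[px for px in row for _ in range(factor)]
              for row in image for _ in range(factor)]
    new_boxes = [tuple(v * factor for v in box) for box in boxes]
    return scaled, new_boxes


# Tiny 2x3 example image with one box around the top-left pixel.
image = [[1, 2, 3],
         [4, 5, 6]]
boxes = [(0, 0, 1, 1)]

flipped_image, flipped_boxes = flip_horizontal(image, boxes)
rotated_image, rotated_boxes = rotate_90_clockwise(image, boxes)
scaled_image, scaled_boxes = scale_up(image, boxes, 2)
```

In each case the transformed box still encloses the pixel with value 1, which is a quick sanity check worth running on any augmentation code before training on its output.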

3. Model Training

Train a lightweight convolutional neural network (CNN) suitable for microcontrollers. Models like MobileNetV2 or custom architectures optimized for size and speed are ideal. Training can be performed using frameworks such as TensorFlow or PyTorch.

Approach:

- Transfer Learning: Utilize pre-trained models and fine-tune them on your dataset.

- Model Architecture: Choose architectures like MobileNetV2 SSD FPN-Lite for bounding box detection or FOMO for centroid-based detection.
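To make the difference between the two detection styles concrete, the sketch below converts bounding-box labels into the kind of coarse centroid grid a FOMO-style model is trained to predict. It assumes a 96x96 input and an output grid at one eighth of the input resolution (12x12 cells); both values, the function name, and the label layout are assumptions chosen for illustration rather than settings taken from this guide.

```python
from typing import List, Tuple

# (class_id, x_min, y_min, x_max, y_max); class_id 0 is reserved for background
LabeledBox = Tuple[int, float, float, float, float]


def boxes_to_centroid_grid(boxes: List[LabeledBox],
                           image_size: int = 96,
                           cell_size: int = 8) -> List[List[int]]:
    """Mark the grid cell containing each box centre with the box's class id."""
    grid_dim = image_size // cell_size
    grid = [[0] * grid_dim for _ in range(grid_dim)]
    for class_id, x_min, y_min, x_max, y_max in boxes:
        center_x = (x_min + x_max) / 2
        center_y = (y_min + y_max) / 2
        # Clamp so a centre on the far edge still falls inside the grid.
        col = min(int(center_x // cell_size), grid_dim - 1)
        row = min(int(center_y // cell_size), grid_dim - 1)
        grid[row][col] = class_id
    return grid


# One object of class 1 whose box centre is at (20, 30).
grid = boxes_to_centroid_grid([(1, 10, 20, 30, 40)])
```

The model then only has to classify each cell, which is a much smaller output than a full set of box coordinates. The trade-off is that object size is not predicted, only approximate position.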

4. Model Optimization

Optimize the trained model to fit the constraints of microcontroller hardware:

- Quantization: Convert 32-bit floating-point weights and activations to 8-bit integers, which shrinks weight storage roughly 4x and typically reduces inference time.

- Pruning: Remove redundant connections in the network to decrease complexity.

- Conversion: Use TensorFlow Lite to convert the model into a format suitable for microcontrollers.

These techniques are discussed in detail in "TinyML Optimization Approaches for Deployment on Edge Devices" (see References).
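The arithmetic behind 8-bit quantization can be sketched in a few lines. The example below uses a simple affine scheme (a scale and a zero point derived from the value range) and is meant only to show the idea; the helper names are hypothetical, and in practice a converter such as TensorFlow Lite chooses the parameters and applies them for you.

```python
from typing import List, Tuple


def quantization_params(values: List[float],
                        qmin: int = -128,
                        qmax: int = 127) -> Tuple[float, int]:
    """Pick a scale and zero point that map the value range onto int8."""
    low = min(min(values), 0.0)   # the range must include 0.0 so that
    high = max(max(values), 0.0)  # zero is represented exactly
    scale = (high - low) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = round(qmin - low / scale)
    return scale, zero_point


def quantize(values: List[float], scale: float, zero_point: int,
             qmin: int = -128, qmax: int = 127) -> List[int]:
    """Map floats to int8 codes, clamping to the representable range."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate float values from int8 codes."""
    return [(c - zero_point) * scale for c in codes]


weights = [-0.8, -0.1, 0.0, 0.3, 1.2]
scale, zero_point = quantization_params(weights)
codes = quantize(weights, scale, zero_point)
restored = dequantize(codes, scale, zero_point)
```

Each restored value differs from the original by at most about one quantization step (`scale`), which is the precision given up in exchange for storing one byte per weight instead of four.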

5. Deployment

Deploy the optimized model to the robot’s microcontroller (e.g., ESP32, STM32). Use platforms like Edge Impulse or TensorFlow Lite for Microcontrollers to facilitate deployment. Ensure the model runs efficiently and meets real-time processing requirements.

Steps:

- Model Conversion: Convert the optimized model to a format compatible with the target microcontroller, for example a C array that is compiled into the firmware.

- Firmware Integration: Integrate the model into the robot’s firmware.

- Testing: Validate the model’s performance in real-world scenarios.
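Microcontrollers often have no file system, so a common way to handle the model-conversion and firmware-integration steps is to embed the model file in the firmware as a byte array, similar to the output of `xxd -i`. Here is a minimal sketch of that conversion; the function name and the variable name `g_model` are placeholders, and the demo uses a few stand-in bytes instead of a real model file.

```python
def bytes_to_c_array(data: bytes, var_name: str = "g_model") -> str:
    """Render raw bytes as a C array definition plus a length constant."""
    lines = []
    for offset in range(0, len(data), 12):  # 12 bytes per source line
        chunk = data[offset:offset + 12]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    return (
        f"const unsigned char {var_name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {var_name}_len = {len(data)};\n"
    )


# Stand-in bytes; with a real model you would read the file contents instead,
# e.g. open("model.tflite", "rb").read() (path is hypothetical).
demo_source = bytes_to_c_array(bytes([0x00, 0x01, 0xFF]), "g_model")
```

The generated text can be saved as a source file in the firmware project, where the inference runtime reads the model directly from flash through the array.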

6. Testing and Validation

Test the deployed model in real-world scenarios to validate its performance. Assess accuracy, latency, and power consumption. Iterate on the model and optimization steps as needed to meet the application’s requirements.

Metrics to Evaluate:

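- Accuracy: Share of objects detected and classified correctly, commonly reported for detection models as precision and recall at a chosen overlap threshold.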

- Latency: Time taken for the model to process an image.

- Power Consumption: Energy usage during model inference.
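These metrics can be computed from logged test runs with a few short helpers. The sketch below assumes a single object class, boxes given as `(x_min, y_min, x_max, y_max)`, and an overlap (IoU) threshold of 0.5 for counting a detection as correct. The function names and sample numbers are illustrative, and predictions are matched in the order given rather than by confidence score, which is a simplification.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions: List[Box], ground_truth: List[Box],
                     iou_threshold: float = 0.5) -> Tuple[float, float]:
    """Greedily match predictions to ground-truth boxes and score them."""
    unmatched = list(ground_truth)
    true_positives = 0
    for pred in predictions:
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= iou_threshold:
            true_positives += 1
            unmatched.remove(best)  # each object can be matched only once
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


def frames_per_second(latency_ms: float) -> float:
    """Throughput implied by a per-image latency."""
    return 1000.0 / latency_ms


def energy_per_inference_mj(avg_power_mw: float, latency_ms: float) -> float:
    """Energy for one inference: mW x ms gives microjoules, so divide by 1000."""
    return avg_power_mw * latency_ms / 1000.0


truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(1, 1, 10, 10), (50, 50, 60, 60)]  # one good hit, one false alarm
precision, recall = precision_recall(detections, truth)
fps = frames_per_second(200.0)
energy = energy_per_inference_mj(150.0, 200.0)
```

With these sample numbers, one of the two detections is correct and one of the two objects is found, so precision and recall are both 0.5. A 200 ms latency corresponds to 5 frames per second, and at an average draw of 150 mW each inference costs 30 mJ.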

Conclusion

Developing a TinyML model for object detection on autonomous mobile robots involves a comprehensive process of data collection, model training, optimization, and deployment. By following these steps, developers can create efficient, real-time object detection systems that operate entirely on-device, enabling smarter and more autonomous robots.

References

- TinyML Optimization Approaches for Deployment on Edge Devices

- Edge Impulse: An MLOps Platform for Tiny Machine Learning

- TinyML Made Easy: Object Detection with XIAO ESP32S3 Sense

- https://www.unite.ai/tinyml-applications-limitations-and-its-use-in-iot-edge-devices/

Feel free to reach out if you have any questions or need further assistance with TinyML development!